USE CASE |

|

| Title: | Text Classification Using ESA and SVM |

| Short Description: | KBpedia makes it easy to set up a supervised machine learner (SVM) to classify arbitrary text into desired domain classes using a semantic vector representation (ESA) of the subject domain. |

| Problem: | We have arbitrary input text that we need to classify in ways that are of interest to us, from topics to sentiment, that we can also reason over. |

| Approach: | The KBpedia knowledge structure allows us to create labeled and domain-specific corpuses for training supervised, unsupervised and deep learning models. We can capture the semantic representation of our content using a variety of vector methods. In this use case, we use the supervised support vector machine (SVM) learner coupled with an explicit semantic analysis (ESA) representation of input content to create a binary classification model of high (97+%) accuracy. The creation of accurate domain and class-specific training sets is rapid (minutes), which makes the method practical for multi-class learners and frees time to refine learner parameters for maximum performance. |

| Key Findings |

|

A common task required by systems that automatically analyze text is to classify arbitrary input text into one or multiple classes. To achieve this task a model needs to be created that scopes the class (what belongs to it and what does not) and then a classification algorithm uses this model to classify the input text.

Multiple classification algorithms can perform such a task, including Support Vector Machines (SVM), K-Nearest Neighbor (KNN), the C4.5 algorithm and others. Applying one of these algorithms is not the hard part of the task; each of these approaches is generally easy to configure and most have implementations in a variety of programming languages. The hard part, which is also time-consuming, is to create a sound training corpus that will properly define the class you want to predict. Further, the steps required to create such a training corpus must be duplicated for each class you want to predict.

In this use case, we show how the KBpedia knowledge structure can be used to automatically generate training corpuses for classification models. The use case proceeds through a number of steps:

- First, we define (scope) a domain with one or multiple KBpedia reference concepts

- Second, we aggregate the training corpus for that domain using the KBpedia knowledge structure and its linkages to external public datasets, which are then used to populate the training corpus of the domain

- Third, we use the Explicit Semantic Analysis (ESA) algorithm to create a vectorial representation of the training corpus

- Fourth, we create a model using (in this use case) an SVM classifier, and

- Finally, we predict if an input text belongs to the class (scoped domain) or not.

Note we are using a supervised machine learning approach (SVM) based on a semantic vector representation of the concepts (ESA) as its feature set. This use case can be used in any workflow that needs to pre-process any set of input texts where the objective is to classify relevant documents into a defined domain.

Unlike more traditional topic taggers where topics are tagged in the input text with weights, we demonstrate how it is possible to use the semantic interpreter to tag main concepts related to an input text even if the surface form of the topic is not mentioned in the text. We accomplish this by leveraging ESA's semantic interpreter.

General and Specific Domains

Two corpuses are created in this use case: the general domain and the specific domain(s). The general domain is the potential set of all specific domains. The specific domain is one or multiple classes that scope a domain of interest; as such, it is a subset of the general domain.

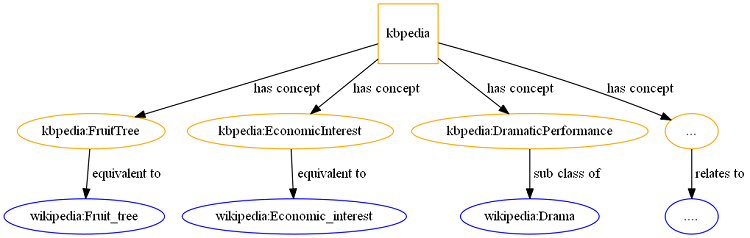

In KBpedia, the general domain is defined by all the ~55,000 KBpedia reference concepts. A specific domain is any sub-set of these that adequately scopes a domain of interest. These concepts also provide the entry into 30 million entities and supporting information mapped to these concepts. Via the combined KBpedia knowledge structure, it is possible to acquire sufficient labeled content for most any supervised learner for a multitude of domains, and well-bounded training corpuses for unsupervised and deep learners.

The purpose of this use case is to show how we can determine if an arbitrary input text belongs to a specific domain of interest. What we have to do is to create two training corpuses: one that defines the general domain, and one that defines the specific domain. The common approach is to create these training sets manually, which takes much time at high cost. This labeling or scoping requirement is the Achilles' heel of any machine learner.

The advantage arises from the ability to leverage the KBpedia knowledge graph to automatically generate the reference concepts underlying the general and specific domains. Via the linkages of KBpedia to its constituent knowledge bases and their 30 million entities, we can also generate labeled training sets and domain corpuses sufficient to drive virtually any supervised or unsupervised machine learner. We can also rapidly create "gold standards" for testing different models, algorithms, or parameters. These are the steps that provide the real performance improvements.

Training Corpuses

The first step is to define the general and domain training corpuses for the semantic interpreter and the SVM classification models. The example domain for this use case is Music, as enhanced by the concepts of Musicians, Music Records, Musical Groups, Musical Instruments, etc.

Define The General Training Corpus

The general training corpus is quite easy to create. We do so by including all of the Wikipedia pages linked to the KBpedia reference concepts. These pages will become the general training corpus. (The same approach could be applied to other general documents.)

Note how easy this is made by the KBpedia structure, in that we only need to query it and then write the general corpus into a CSV file. This CSV file will be used later for most of the subsequent tasks.

(define-general-corpus "resources/kbpedia_reference_concepts_linkage.n3" "resources/general-corpus-dictionary.csv")

Define The Specific Domain Training Corpus

The next step is to define the training corpus of the specific domain for this use case, the music domain. To do so, we now use the broader KBpedia structure to search for all of the reference concepts relevant to the music domain. These domain-specific KBpedia reference concepts provide the feature set for the SVM models we will test below.

The define-domain-corpus function below simply queries KBpedia to get all of the Wikipedia articles related to these concepts and their sub-classes, and creates the training corpus from them.

Our use case is based on a binary classifier. However, we could create a multi-class classifier by defining multiple specific domain training corpuses in exactly the same way. The only additional time required would be to search KBpedia to find the reference concepts we want for the additional desired classes.

(define-domain-corpus
  ["http://kbpedia.org/kko/rc/Music"
   "http://kbpedia.org/kko/rc/Musician"
   "http://kbpedia.org/kko/rc/MusicPerformanceOrganization"
   "http://kbpedia.org/kko/rc/MusicalInstrument"
   "http://kbpedia.org/kko/rc/Album-CW"
   "http://kbpedia.org/kko/rc/Album-IBO"
   "http://kbpedia.org/kko/rc/MusicalComposition"
   "http://kbpedia.org/kko/rc/MusicalText"
   "http://kbpedia.org/kko/rc/PropositionalConceptualWork-MusicalGenre"
   "http://kbpedia.org/kko/rc/MusicalPerformer"]
  "resources/kbpedia_reference_concepts_linkage.n3"
  "resources/domain-corpus-dictionary.csv")

Create Training Corpuses

Once the training corpuses are defined, we want to cache them locally to be able to play with them, without having to re-download them from the Web or re-create them each time.

(cache-corpus)

The cache is composed of 24,374 Wikipedia pages, which is about 2 GB of raw data. However, we have some more processing to perform on the raw Wikipedia pages since what we ultimately want is a set of relevant tokens (words) that will be used to calculate the value of the features of our model using the ESA semantic interpreter. Since we may want to experiment with different normalization rules, what we do is to re-write each document of the corpus in another folder that we will be able to re-create as required if the normalization rules change in the future. We can quickly re-process these input files and save them in separate folders for testing and comparative purposes.

The normalization steps performed by this function are to:

- Defluff the raw HTML page. We convert the HTML into text, and we only keep the body of the page

- Normalize the text with the following rules:

  - Remove diacritic characters

  - Remove everything between brackets like: [edit] [show]

  - Remove punctuation

  - Remove all numbers

  - Remove all invisible control characters

  - Remove all [math] symbols

  - Remove all words with 2 characters or fewer

  - Remove line and paragraph separators

  - Remove anything that is not an alpha character

  - Normalize spaces

  - Put everything in lower case, and

  - Remove stop words.

Normalization steps could be dropped or others included, but these are the standard ones that might be applied in a baseline configuration.

(normalize-cached-corpus "resources/corpus/" "resources/corpus-normalized/")
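As a rough illustration of the rules listed above, the following sketch applies a subset of them to a plain-text string. It is not the code behind normalize-cached-corpus: the function name normalize-text and the tiny stop-words set are hypothetical, and the HTML defluffing step is assumed to have happened already.

```clojure
(require '[clojure.string :as str])
(import '(java.text Normalizer Normalizer$Form))

;; Hypothetical, deliberately tiny stop-word list, for illustration only.
(def stop-words #{"the" "and" "for" "with" "that" "this"})

(defn normalize-text
  "Applies a subset of the normalization rules to a plain-text string."
  [text]
  (let [cleaned (-> text
                    ;; decompose accented characters, then drop the combining marks
                    (Normalizer/normalize Normalizer$Form/NFD)
                    (str/replace #"\p{M}+" "")
                    ;; remove bracketed fragments such as [edit] or [show]
                    (str/replace #"\[[^\]]*\]" " ")
                    str/lower-case
                    ;; punctuation, digits and control characters become one space
                    (str/replace #"[^a-z]+" " ")
                    str/trim)]
    (->> (str/split cleaned #"\s+")
         ;; drop words of 2 characters or fewer, and stop words
         (remove #(or (<= (count %) 2) (stop-words %)))
         (str/join " "))))

(normalize-text "Café [edit] The Beatles released 13 albums!")
;; => "cafe beatles released albums"
```

Keeping each rule as one step in the pipeline makes it easy to drop or reorder rules when experimenting with different normalization settings.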

After cleaning, the size of the cache is now 208 MB (instead of the initial 2 GB for the raw web pages).

Note that, unlike what is discussed in the original ESA research papers by Evgeniy Gabrilovich, we are not pruning any pages (for example, the ones with fewer than X tokens). This could be done at a subsequent tweaking step, but our results below indicate that it is not really necessary.

Now that the training corpuses are created, we can build the semantic interpreter to create the vectors that will be used to train the SVM classifier.

Build Semantic Interpreter

What we want to do is to classify (determine) if an input text belongs to a class as defined by a domain. The relatedness of the input text is based on how closely the specific domain corpus is related to the general one. This classification is performed with some classifiers like SVM, KNN and C4.5. However, each of these algorithms needs to use some kind of numerical vector, upon which the actual classifier models and classifies the candidate input text. Creating this numeric vector is the job of the ESA Semantic Interpreter.

Let's dive a little further into the Semantic Interpreter to understand how it operates. Note that you can skip the next section and continue with the following one.

How Does the Semantic Interpreter Work?

The Semantic Interpreter is a process that maps fragments of natural language into a weighted sequence of text concepts ordered by their relevance to the input.

Each concept in the domain is accompanied by a document from the KBpedia Knowledge Graph, which acts as its representative term set to capture the idea (meaning) of the concept. The overall corpus is based on the combined documents from KBpedia that match the slice retrieved from the knowledge graph based on the domain query(ies).
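To make the idea concrete, here is a minimal sketch of such a mapping, assuming the word-to-concept weights are already available as a nested map. The names toy-matrix and interpret and all of the weights are hypothetical. For brevity the sketch simply adds up the concept weights of every known word, whereas a full ESA interpreter also weights each input word by its own TF-IDF value.

```clojure
(require '[clojure.string :as str])

;; Hypothetical toy weights: word -> {concept -> weight}.
(def toy-matrix
  {"guitar" {"MusicalInstrument" 0.8 "Musician" 0.3}
   "album"  {"Album" 0.9 "Musician" 0.2}
   "stock"  {"Finance" 0.7}})

(defn interpret
  "Sums the concept vectors of the known words in `text` and returns
   [concept weight] entries sorted by decreasing weight."
  [matrix text]
  (->> (str/split (str/lower-case text) #"\W+")
       (keep matrix)            ; concept vector of each known word
       (apply merge-with +)     ; add the weights concept by concept
       (sort-by val >)))

;; Concepts for a short text, most relevant first.
(map key (interpret toy-matrix "The guitar on this album"))
;; => ("Album" "MusicalInstrument" "Musician")
```

Note that the Musician concept is returned even though the word "musician" never occurs in the input, which is the behavior described earlier for the semantic interpreter.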

The corpus is composed of \(n\) concepts that come from the domain ontology associated with \(n\) KBpedia knowledge structure documents. We build a sparse matrix \(T\) where each of the \(n\) columns corresponds to a concept and where each of the rows corresponds to a word that occurs in the related entity documents. The matrix entry \(T\left[i,j\right]\) is the TF-IDF value of the word \(w_{i}\) in document \(d_{j}\).

\[
T =
\left[ \begin{array}{cccccc}
 & C_{1} & C_{2} & C_{3} & \ldots & C_{j} \\
w_{1} & 0 & 0 & 0 & \ldots & 0 \\
w_{2} & 0 & 0.9 & 0 & \ldots & 2.34 \\
\ldots & 0 & 0 & 0 & \ldots & 0 \\
w_{i} & 0 & 1.2 & 0 & \ldots & 0 \\
\end{array} \right]
\]

The TF-IDF value of a given term is calculated as:

\[
T\left[i,j\right] = tf(t_{i},d_{j}) \cdot \log \frac{n}{1 + df_{i}}
\]

where \(n\) is the number of concept documents in the corpus, and where the term frequency of the term \(t_{i}\) among the words in the document \(d_{j}\) is defined as:

\[
tf(t_{i},d_{j}) = \left\{
\begin{array}{l l}
1 + \log count(t_{i},d_{j}), & \textit{if } count(t_{i},d_{j}) > 0 \\
0, & \textit{otherwise}
\end{array} \right.
\]

and where the document frequency \(df_{i}\) is the number of documents where the term \(t_{i}\) appears.
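The two formulas above can be sketched in a few lines of Clojure. This is an illustrative sketch, not the use case's implementation: the names tf, tf-idf and toy-docs are hypothetical, documents are assumed to be vectors of already normalized tokens, and n is taken to be the number of documents in the corpus, which is the usual reading of the inverse document frequency term.

```clojure
(defn tf
  "Log-scaled term frequency: 1 + log(count) when the term occurs, else 0."
  [term doc]
  (let [c (count (filter #{term} doc))]
    (if (pos? c)
      (+ 1.0 (Math/log c))
      0.0)))

(defn tf-idf
  "TF-IDF of `term` in `doc`, where `docs` is the whole corpus."
  [term doc docs]
  (let [n  (count docs)                              ; number of documents
        df (count (filter #(some #{term} %) docs))]  ; documents containing term
    (* (tf term doc) (Math/log (/ n (+ 1.0 df))))))

;; Hypothetical four-document corpus.
(def toy-docs
  [["guitar" "guitar" "band"]
   ["band" "stock"]
   ["stock" "market"]
   ["market" "price"]])

;; "guitar" occurs twice in the first document and in no other document,
;; so the weight is (1 + log 2) * log(4 / (1 + 1)).
(tf-idf "guitar" (first toy-docs) toy-docs)
```

One consequence of the 1 + df smoothing is that a term appearing in every document gets a slightly negative weight, since n / (1 + n) is below 1.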

Unlike the standard ESA system, pruning is not performed on the matrix to remove the least-related concepts for any given word. We skip pruning because the ontologies are highly domain specific, as opposed to really broad and general vocabularies. However, with a different mix of training text, and depending on the use case, the standard ESA model may benefit from pruning the matrix.

Once the matrix is created, we do perform cosine normalization on each column:

\[
T\left[i,j\right] = \frac{T\left[i,j\right]}{\sqrt{\sum_{l=1}^{r} T\left[l,j\right]^{2}}}
\]

where \(T\left[i,j\right]\) is the TF-IDF weight of the word \(w_{i}\) in the concept document \(d_{j}\), and where \(\sqrt{\sum_{l=1}^{r} T\left[l,j\right]^{2}}\) is the square root of the sum of the squares of the TF-IDF weights of each of the \(r\) words \(w_{l}\) in document \(d_{j}\). This normalization removes, or at least lowers, the effect of the length of the input documents.
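As a worked example, take column \(C_{2}\) of the illustrative matrix shown earlier and assume, purely for illustration, that its only non-zero entries are the two that are displayed (0.9 for \(w_{2}\) and 1.2 for \(w_{i}\)):

```latex
\[
\sqrt{\sum_{l=1}^{r} T\left[l,2\right]^{2}} = \sqrt{0.9^{2} + 1.2^{2}} = \sqrt{0.81 + 1.44} = \sqrt{2.25} = 1.5
\]
\[
T\left[2,2\right] = \frac{0.9}{1.5} = 0.6, \qquad T\left[i,2\right] = \frac{1.2}{1.5} = 0.8
\]
```

After normalization the column has unit length (0.36 + 0.64 = 1), so a long concept document with many large weights no longer dominates a short one.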

Creating the First Semantic Interpreter

The first semantic interpreter is composed of the general corpus, which has 24,374 Wikipedia pages, and the music domain-specific corpus, composed of 62 Wikipedia pages. The 62 Wikipedia pages that compose the music domain corpus come from the selected KBpedia reference concepts and their sub-classes that we defined in the Define The Specific Domain Training Corpus section above.

(load-dictionaries "resources/general-corpus-dictionary.csv" "resources/domain-corpus-dictionary--base.csv")
(build-semantic-interpreter "base" "resources/semantic-interpreters/base/" (distinct (concat (get-domain-pages) (get-general-pages))))

Evaluating Models

Before building the SVM classifier, we have to create a gold standard that we will use to evaluate the performance of the models we will test. This is a standard step with all of Cognonto's machine learners.

For this use case, we aggregate a list of news feeds from the CBC and from Reuters and then crawl each of them to get the news content. Each news feed document is manually classified. The result is a gold standard of 336 news pages, classified as being related to the music domain or not (the basis for a binary classifier). It can be downloaded from here. Because of the logical structuring within KBpedia, we can classify these feeds in minutes.

For comparative purposes, we also created a second gold standard that has 345 news pages. It can be downloaded from here. We use both to evaluate the different SVM models below.

Both gold standards get created this way:

;; Assumes the aliases io = clojure.java.io and csv = clojure.data.csv,
;; and that feed is a feed-parsing namespace that provides parse-feed.
(defn create-gold-standard-from-feeds
  [name]
  (let [feeds ["http://rss.cbc.ca/lineup/topstories.xml"
               "http://rss.cbc.ca/lineup/world.xml"
               "http://rss.cbc.ca/lineup/canada.xml"
               "http://rss.cbc.ca/lineup/politics.xml"
               "http://rss.cbc.ca/lineup/business.xml"
               "http://rss.cbc.ca/lineup/health.xml"
               "http://rss.cbc.ca/lineup/arts.xml"
               "http://rss.cbc.ca/lineup/technology.xml"
               "http://rss.cbc.ca/lineup/offbeat.xml"
               "http://www.cbc.ca/cmlink/rss-cbcaboriginal"
               "http://rss.cbc.ca/lineup/sports.xml"
               "http://rss.cbc.ca/lineup/canada-britishcolumbia.xml"
               "http://rss.cbc.ca/lineup/canada-calgary.xml"
               "http://rss.cbc.ca/lineup/canada-montreal.xml"
               "http://rss.cbc.ca/lineup/canada-pei.xml"
               "http://rss.cbc.ca/lineup/canada-ottawa.xml"
               "http://rss.cbc.ca/lineup/canada-toronto.xml"
               "http://rss.cbc.ca/lineup/canada-north.xml"
               "http://rss.cbc.ca/lineup/canada-manitoba.xml"
               "http://feeds.reuters.com/news/artsculture"
               "http://feeds.reuters.com/reuters/businessNews"
               "http://feeds.reuters.com/reuters/entertainment"
               "http://feeds.reuters.com/reuters/companyNews"
               "http://feeds.reuters.com/reuters/lifestyle"
               "http://feeds.reuters.com/reuters/healthNews"
               "http://feeds.reuters.com/reuters/MostRead"
               "http://feeds.reuters.com/reuters/peopleNews"
               "http://feeds.reuters.com/reuters/scienceNews"
               "http://feeds.reuters.com/reuters/technologyNews"
               "http://feeds.reuters.com/Reuters/domesticNews"
               "http://feeds.reuters.com/Reuters/worldNews"
               "http://feeds.reuters.com/reuters/USmediaDiversifiedNews"]]
    (with-open [out-file (io/writer (str "resources/" name ".csv"))]
      ;; header row; the "class" column is filled in manually afterwards
      (csv/write-csv out-file [["class" "title" "url"]])
      (doseq [feed-url feeds]
        (doseq [item (:entries (feed/parse-feed feed-url))]
          ;; write-csv expects a sequence of rows, so the row is wrapped in a vector
          (csv/write-csv out-file [["" (:title item) (:link item)]] :append true))))))

Each of the different models we test in the next sections is evaluated using the following functions:

(defn evaluate-model
  [evaluation-no gold-standard-file]
  (let [gold-standard (rest
                       (with-open [in-file (io/reader gold-standard-file)]
                         (doall
                          (csv/read-csv in-file))))
        true-positive (atom 0)
        false-positive (atom 0)
        true-negative (atom 0)
        false-negative (atom 0)]
    (with-open [out-file (io/writer (str "resources/evaluate-" evaluation-no ".csv"))]
      (csv/write-csv out-file [["class" "title" "url"]])
      (doseq [[class title url] gold-standard]
        (when-not (.exists (io/as-file (str "resources/gold-standard-cache/" (md5 url))))
          (spit (str "resources/gold-standard-cache/" (md5 url)) (slurp url)))
        (let [predicted-class (classify-text (-> (slurp (str "resources/gold-standard-cache/" (md5 url)))
                                                 defluff-content))]
          (println predicted-class " :: " title)
          (csv/write-csv out-file [[predicted-class title url]] :append true)
          (when (and (= class "1") (= predicted-class 1.0))
            (swap! true-positive inc))
          (when (and (= class "0") (= predicted-class 1.0))
            (swap! false-positive inc))
          (when (and (= class "0") (= predicted-class 0.0))
            (swap! true-negative inc))
          (when (and (= class "1") (= predicted-class 0.0))
            (swap! false-negative inc))))
      (println "True positive: " @true-positive)
      (println "false positive: " @false-positive)
      (println "True negative: " @true-negative)
      (println "False negative: " @false-negative)
      (println)
      (let [precision (float (/ @true-positive (+ @true-positive @false-positive)))
            recall (float (/ @true-positive (+ @true-positive @false-negative)))]
        (println "Precision: " precision)
        (println "Recall: " recall)
        (println "Accuracy: " (float (/ (+ @true-positive @true-negative)
                                        (+ @true-positive @false-negative @false-positive @true-negative))))
        (println "F1: " (float (* 2 (/ (* precision recall) (+ precision recall)))))))))

These functions count the true positives, false positives, true negatives and false negatives within the gold standard by applying the current model, and then calculate the precision, recall, accuracy and F1 metrics. You can read more about how binary classifiers may be evaluated from here.
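The four metrics can also be computed as a small pure function over the confusion counts, which is convenient for checking the figures reported in the following sections by hand. This is a sketch under stated assumptions: the names safe-div and metrics are hypothetical, and the guard against a zero denominator is an addition of this sketch for the case where a model predicts no positives at all.

```clojure
(defn safe-div
  "Floating-point division that returns 0.0 when the denominator is zero."
  [num den]
  (if (zero? den)
    0.0
    (double (/ num den))))

(defn metrics
  "Precision, recall, accuracy and F1 from the four confusion counts:
   true positives, false positives, true negatives, false negatives."
  [tp fp tn fneg]
  (let [precision (safe-div tp (+ tp fp))
        recall    (safe-div tp (+ tp fneg))]
    {:precision precision
     :recall    recall
     :accuracy  (safe-div (+ tp tn) (+ tp fp tn fneg))
     :f1        (safe-div (* 2 precision recall) (+ precision recall))}))

;; Counts taken from the weight-10 run against the first gold standard.
(metrics 17 1 309 9)
```

For those counts, precision is 17/18, recall is 17/26, accuracy is 326/336 and F1 is 34/44, which agrees with the values printed for that run.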

Build SVM Model

Now that we have numeric vector representations of the music domain and a way to evaluate the quality of the models we will be creating, we can create and evaluate our prediction models.

The classification algorithm we use for this use case is the Support Vector Machine (SVM). We use the Java port of the LIBLINEAR library. Let's create the first SVM model:

(build-svm-model-vectors "resources/svm/base/")
(train-svm-model "svm.w0" "resources/svm/base/" :weights nil :v nil :c 1 :algorithm :l2l2)

This initial model is created using a training set that is composed of 24,311 documents that do not belong to the specific class (that is, the music domain), and 62 documents that do belong to that class.

Now, let's evaluate how this initial model performs against the two gold standards:

(evaluate-model "w0" "resources/gold-standard-1.csv")

True positive: 5
False positive: 0
True negative: 310
False negative: 21
Precision: 1.0
Recall: 0.1923077
Accuracy: 0.9375
F1: 0.32258064

(evaluate-model "w0" "resources/gold-standard-2.csv")

True positive: 2
false positive: 1
True negative: 319
False negative: 23
Precision: 0.6666667
Recall: 0.08
Accuracy: 0.93043476
F1: 0.14285713

This first run looks to be really poor! The issue here is a common one with how the SVM classifier is being used. Ideally, the number of documents that belong to the class and the number of documents that do not belong to the class should be about the same. However, because of the way we defined the music specific domain, and because of the way we created the training corpuses, we ended up with two really unbalanced sets of training documents: 24,311 that do not belong to the class and only 62 that do. This sample imbalance is one reason why we are getting such poor results.

What can we do from here? We have two possibilities:

- We use LIBLINEAR's weight modifier parameter to modify the weight of the terms that exist in the 62 documents that belong to the class. Because the two sets are so unbalanced, the weight should theoretically be around 386, or

- We add thousands of new documents that belong to the class we want to predict.

Let's test both options. We will initially play with the weights to see how much we can improve the current situation.

Improving Performance Using Weights

We will now create a series of models that differ only in the weight we assign to the terms of the documents that belong to the class in the SVM process.

Weight 10

(train-svm-model "svm.w10" "resources/svm/base/" :weights {1 10.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w10" "resources/gold-standard-1.csv")

True positive: 17
False positive: 1
True negative: 309
False negative: 9
Precision: 0.9444444
Recall: 0.65384614
Accuracy: 0.9702381
F1: 0.77272725

(evaluate-model "w10" "resources/gold-standard-2.csv")

True positive: 15
False positive: 2
True negative: 318
False negative: 10
Precision: 0.88235295
Recall: 0.6
Accuracy: 0.9652174
F1: 0.71428573

This is already a clear improvement for both gold standards. Let's see if we continue to see improvements if we continue to increase the weight.

Weight 25

(train-svm-model "svm.w25" "resources/svm/base/" :weights {1 25.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w25" "resources/gold-standard-1.csv")

True positive: 20
False positive: 3
True negative: 307
False negative: 6
Precision: 0.8695652
Recall: 0.7692308
Accuracy: 0.97321427
F1: 0.8163265

(evaluate-model "w25" "resources/gold-standard-2.csv")

True positive: 21
False positive: 5
True negative: 315
False negative: 4
Precision: 0.8076923
Recall: 0.84
Accuracy: 0.973913
F1: 0.82352936

The general metrics continue to improve. By increasing the weight, the precision dropped a little bit, but the recall improved quite a bit. The overall F1 score significantly improved. Let's see with the Weight at 50.

Weight 50

(train-svm-model "svm.w50" "resources/svm/base/" :weights {1 50.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w50" "resources/gold-standard-1.csv")

True positive: 23
False positive: 7
True negative: 303
False negative: 3
Precision: 0.76666665
Recall: 0.88461536
Accuracy: 0.9702381
F1: 0.82142854

(evaluate-model "w50" "resources/gold-standard-2.csv")

True positive: 23
False positive: 6
True negative: 314
False negative: 2
Precision: 0.79310346
Recall: 0.92
Accuracy: 0.9768116
F1: 0.8518519

The trend continues: precision declines while recall increases. The overall F1 score is better in both cases. Let's try with a weight of 200.

Weight 200

(train-svm-model "svm.w200" "resources/svm/base/" :weights {1 200.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w200" "resources/gold-standard-1.csv")

True positive: 23
False positive: 7
True negative: 303
False negative: 3
Precision: 0.76666665
Recall: 0.88461536
Accuracy: 0.9702381
F1: 0.82142854

(evaluate-model "w200" "resources/gold-standard-2.csv")

True positive: 23
False positive: 6
True negative: 314
False negative: 2
Precision: 0.79310346
Recall: 0.92
Accuracy: 0.9768116
F1: 0.8518519

The results are the same as with a weight of 50: increasing the weight adds to the predictive power only up to a certain point. However, the goal of this use case is not to be an SVM parametrization tutorial. Many other tests could be done, such as trying different values for other SVM parameters like the C parameter.

Improving Performance Using New Music Domain Documents

Now let's see if we can improve the performance of the model even more by adding new documents that belong to the class we want to define in the SVM model. The idea of adding documents is good, but how may we quickly process thousands of new documents that belong to that class? Easy, we will use the KBpedia Knowledge Graph and its linkage to entities within the KBpedia knowledge structure to get thousands of new documents highly related to the music domain we are defining.

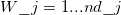

Here is how we will proceed. See how we use the type relationship between the classes and their individuals:

The millions of completely typed instances in KBpedia enable us to retrieve such large training sets efficiently and quickly.

Extending the Music Domain Model

To extend the music domain model we added about 5,000 album, musician and band documents using the relationship querying strategy outlined in the figure above. We added three (3) new features, which results in actually adding thousands of new training documents to the corpus.

What we do to extend the domain model is to:

- Extend the domain pages with the new entities

- Cache the new entities' Wikipedia pages

- Build a new semantic interpreter that takes the new documents into account, and

- Build a new SVM model that uses the new semantic interpreter's output.

(extend-domain-pages-with-entities)
(cache-corpus)

(load-dictionaries "resources/general-corpus-dictionary.csv" "resources/domain-corpus-dictionary--extended.csv")
(build-semantic-interpreter "domain-extended" "resources/semantic-interpreters/domain-extended/" (distinct (concat (get-domain-pages) (get-general-pages))))
(build-svm-model-vectors "resources/svm/domain-extended/")

Bingo! It is that easy to significantly expand our training basis.

Evaluating the Extended Music Domain Model

Just as we did for the first series of tests, we will now create different SVM models and evaluate them. Since we now have a nearly balanced set of training corpus documents, we will test much smaller weights (no weight, and then a weight of 2).

(train-svm-model "svm.w0" "resources/svm/domain-extended/" :weights nil :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w0" "resources/gold-standard-1.csv")

True positive: 20
False positive: 12
True negative: 298
False negative: 6
Precision: 0.625
Recall: 0.7692308
Accuracy: 0.9464286
F1: 0.6896552

(evaluate-model "w0" "resources/gold-standard-2.csv")

True positive: 18
False positive: 17
True negative: 303
False negative: 7
Precision: 0.51428574
Recall: 0.72
Accuracy: 0.93043476
F1: 0.6

As we can see, the model is scoring much better than the previous one when the weight was zero. However, it is not as good as the previous one when weights were modified. Let's see if we can benefit by increasing the weight for this new training set:

(train-svm-model "svm.w2" "resources/svm/domain-extended/" :weights {1 2.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w2" "resources/gold-standard-1.csv")

True positive: 21
False positive: 23
True negative: 287
False negative: 5
Precision: 0.47727272
Recall: 0.8076923
Accuracy: 0.9166667
F1: 0.59999996

(evaluate-model "w2" "resources/gold-standard-2.csv")

True positive: 20
False positive: 33
True negative: 287
False negative: 5
Precision: 0.3773585
Recall: 0.8
Accuracy: 0.8898551
F1: 0.51282054

Overall the model seems worse with weight 2. OK, then, let's try with weight 5:

(train-svm-model "svm.w5" "resources/svm/domain-extended/" :weights {1 5.0} :v nil :c 1 :algorithm :l2l2)
(evaluate-model "w5" "resources/gold-standard-1.csv")

True positive: 25
False positive: 52
True negative: 258
False negative: 1
Precision: 0.32467532
Recall: 0.96153843
Accuracy: 0.8422619
F1: 0.4854369

(evaluate-model "w5" "resources/gold-standard-2.csv")

True positive: 23
False positive: 62
True negative: 258
False negative: 2
Precision: 0.27058825
Recall: 0.92
Accuracy: 0.81449276
F1: 0.41818184

Performance is just getting worse. But this makes sense at the same time. Now that the training set is balanced, many more tokens participate in the semantic interpreter, and so in the vectors generated by it and used by the SVM. If we increase the weight of a balanced training set, then this intuitively should re-unbalance the training set and worsen performance. This is what is apparently happening.

Re-balancing the training set using this strategy does not look to be improving the prediction model, at least not for this domain and not for these SVM parameters.

Improving Using Manual Features Selection

So far, we have been able to test different kinds of strategies to create different training corpuses, to select different features, etc. We have been able to do this within a day, mostly waiting for the desktop computer to build the semantic interpreter and the vectors for the training sets. It has been possible thanks to the KBpedia knowledge graph that enabled us to easily and automatically slice-and-dice the knowledge structure to perform all these tests quickly and efficiently.

There are other things we could do to continue to improve the prediction model, such as manually selecting features returned by KBpedia. Then we could test different parameters of the SVM classifier, etc. Such tweaks are the possible topics of later use cases.

Multiclass Classification

As we saw, we can easily define domains by selecting one or multiple KBpedia reference concepts and all of their sub-classes. This general process enables us to scope any domain we want to cover. Then we can use the KBpedia knowledge graph's relationship with external data sources to create the training corpus for the scoped domain. Finally, we can use SVM as a binary classifier to determine if an input text belongs to the domain or not. However, what if we want to classify an input text with more than one domain?

This can easily be done by using the one-vs-rest (also called the one-vs-all) multiclass classification strategy. The only thing we have to do is to define multiple domains of interest, and then to create an SVM model for each of them. As noted above, this effort is almost solely one of posing one or more queries to KBpedia for a given domain. Finally, to predict whether an input text belongs to any of the domain models we defined, we need to apply an SVM option (like LIBLINEAR) that already implements multi-class SVM classification.
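The one-vs-rest idea can be sketched independently of any SVM library: keep one binary classifier per domain and collect the domains whose classifier accepts the text. In the sketch below every name is hypothetical, and keyword-classifier is only a stand-in for a trained per-domain SVM model; like the classifier evaluated above, each stand-in answers 1.0 for a member of the domain and 0.0 otherwise.

```clojure
(require '[clojure.string :as str])

(defn keyword-classifier
  "Hypothetical stand-in for a trained binary model: answers 1.0 when the
   text mentions any of the given keywords, 0.0 otherwise."
  [keywords]
  (fn [text]
    (if (some #(str/includes? (str/lower-case text) %) keywords)
      1.0
      0.0)))

;; One binary classifier per domain of interest.
(def domain-classifiers
  {"music"   (keyword-classifier ["guitar" "album"])
   "finance" (keyword-classifier ["stock" "market"])})

(defn classify-domains
  "Runs every binary classifier on `text` and returns the set of domains
   whose classifier answers 1.0."
  [classifiers text]
  (set (for [[domain classify] classifiers
             :when (= 1.0 (classify text))]
         domain)))

(classify-domains domain-classifiers "Album sales lift the stock")
;; => #{"music" "finance"}
```

Returning a set rather than a single label lets one text belong to several domains, or to none of them.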

Conclusion

This use case tests multiple, different strategies to create a good prediction model using SVM to classify input texts into a music-related class. We tested unbalanced training corpuses, balanced training corpuses, different sets of features, etc. Some of these tests improved the prediction model; others made it worse. The key point that should be remembered is that any machine learning effort requires bounding, labeling, testing and refining multiple parameters in order to obtain the best results. Use of the KBpedia knowledge graph and its linkage to external public datasets enables us to now do these previously lengthy and time-consuming tasks quickly and efficiently.

Within a few hours, we created a classifier with an accuracy of about 97% that determines whether an input text belongs to the music domain or not. We demonstrate how we can create such classifiers more-or-less automatically using the KBpedia Knowledge Graph to define the scope of the domain and to classify new text into that domain based on relevant KBpedia reference concepts. Finally, we note how we may create multi-class classifiers using exactly these same mechanisms.